Ello.

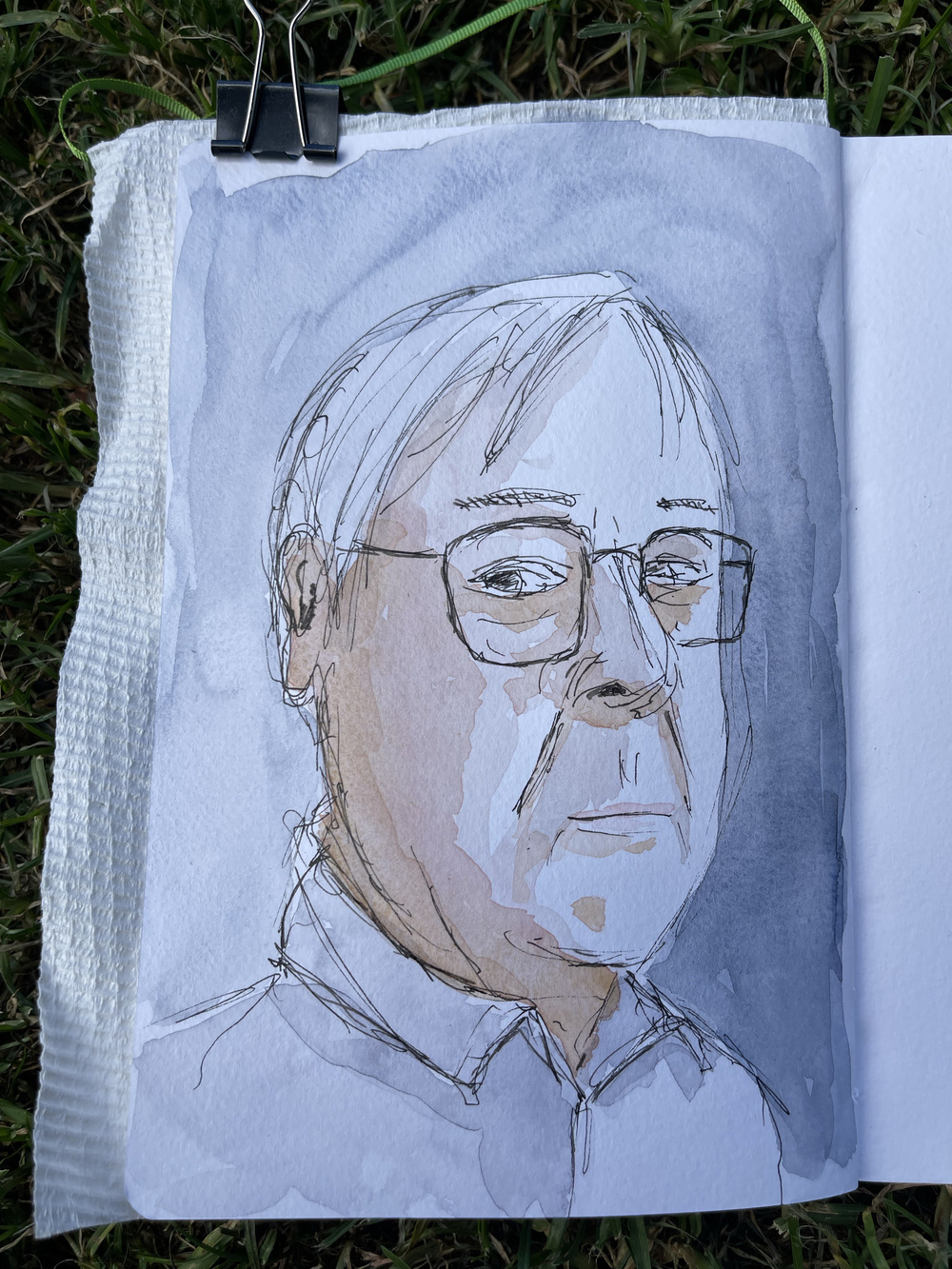

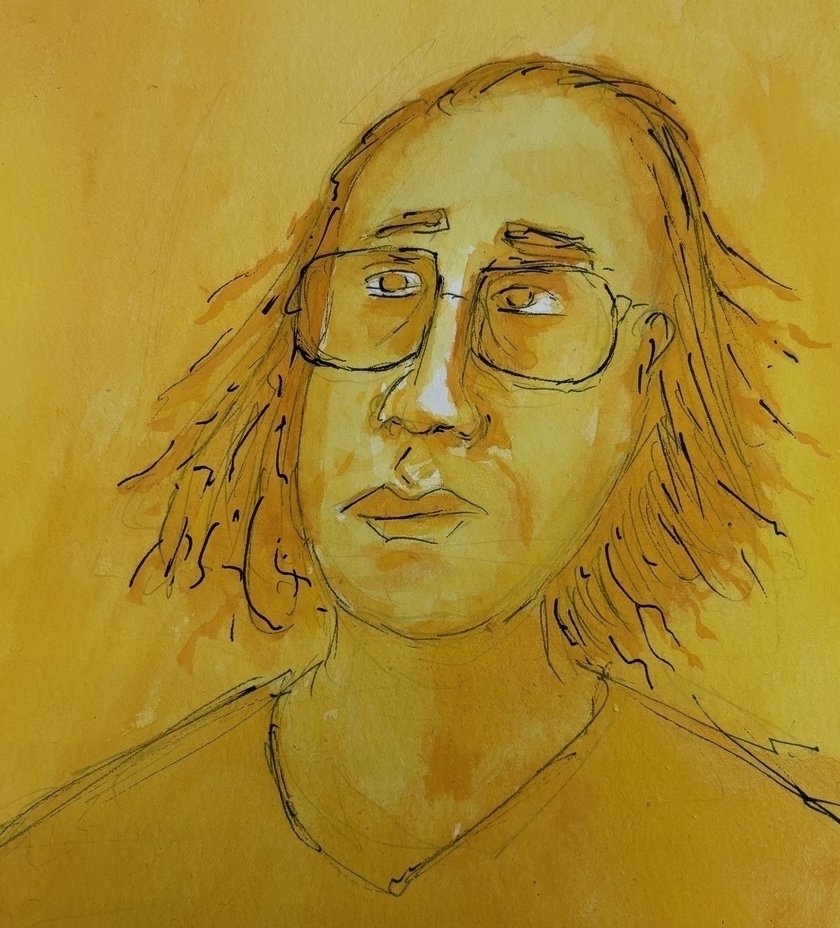

My name is Josh. I'm a learner and scribbler.

This site is relatively ancient (I started it in high school) and has been through many platforms and iterations. A bunch of links etc. are busted.

It is now mostly a feed of things I've made, done or posted elsewhere.

Some current favourites:

(This is automated and updates once daily.)